Introduction

On August 1st, 2022, I found myself in the eye of a storm. As a protocol engineer at Nomad, I watched in real time as $190 million was drained from our bridge in one of the largest hacks in DeFi history. The experience was surreal, devastating, and, ultimately, transformative.

That moment sparked the creation of Phylax Systems, driven by a mission to solve security so that crypto can win. It’s not just about safeguarding assets; it’s about helping our industry restore its credibility and achieve its full potential. Phylax is dedicated to developing intuitive, open-standard products grounded in first-principles thinking, making security accessible to everyone.

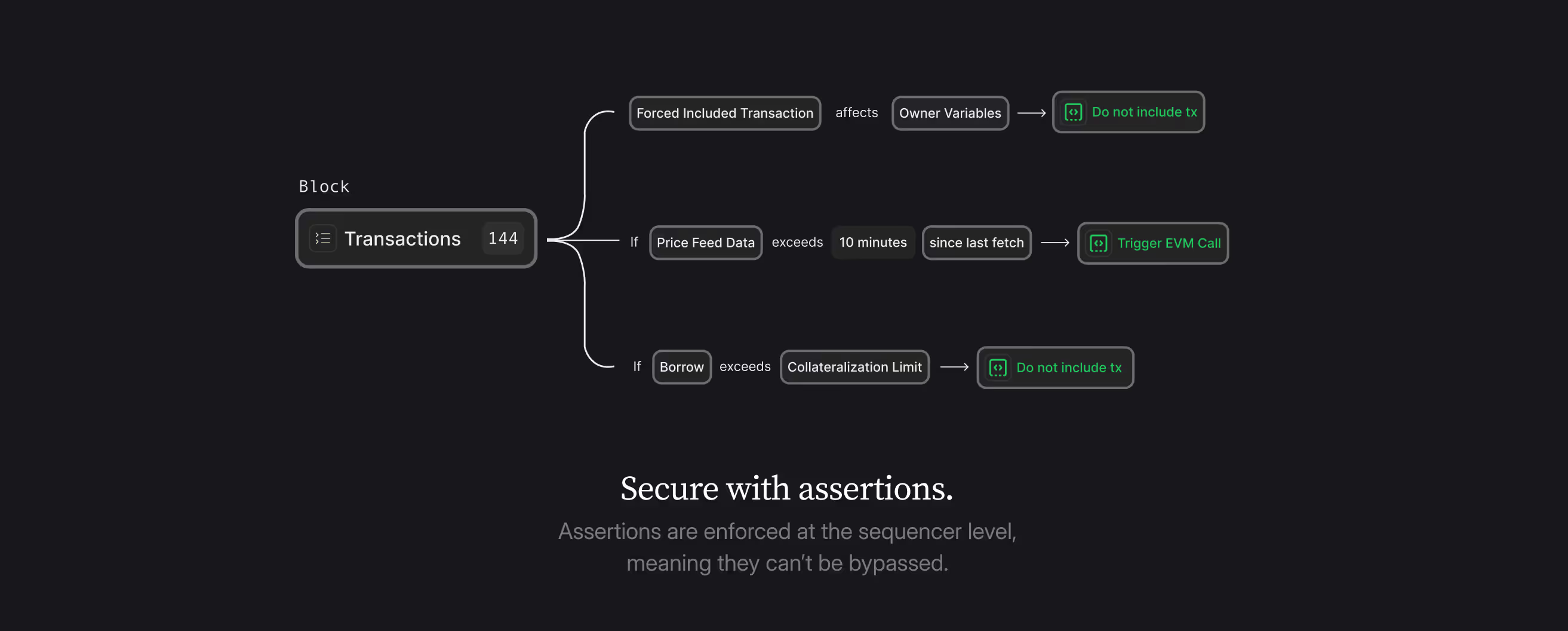

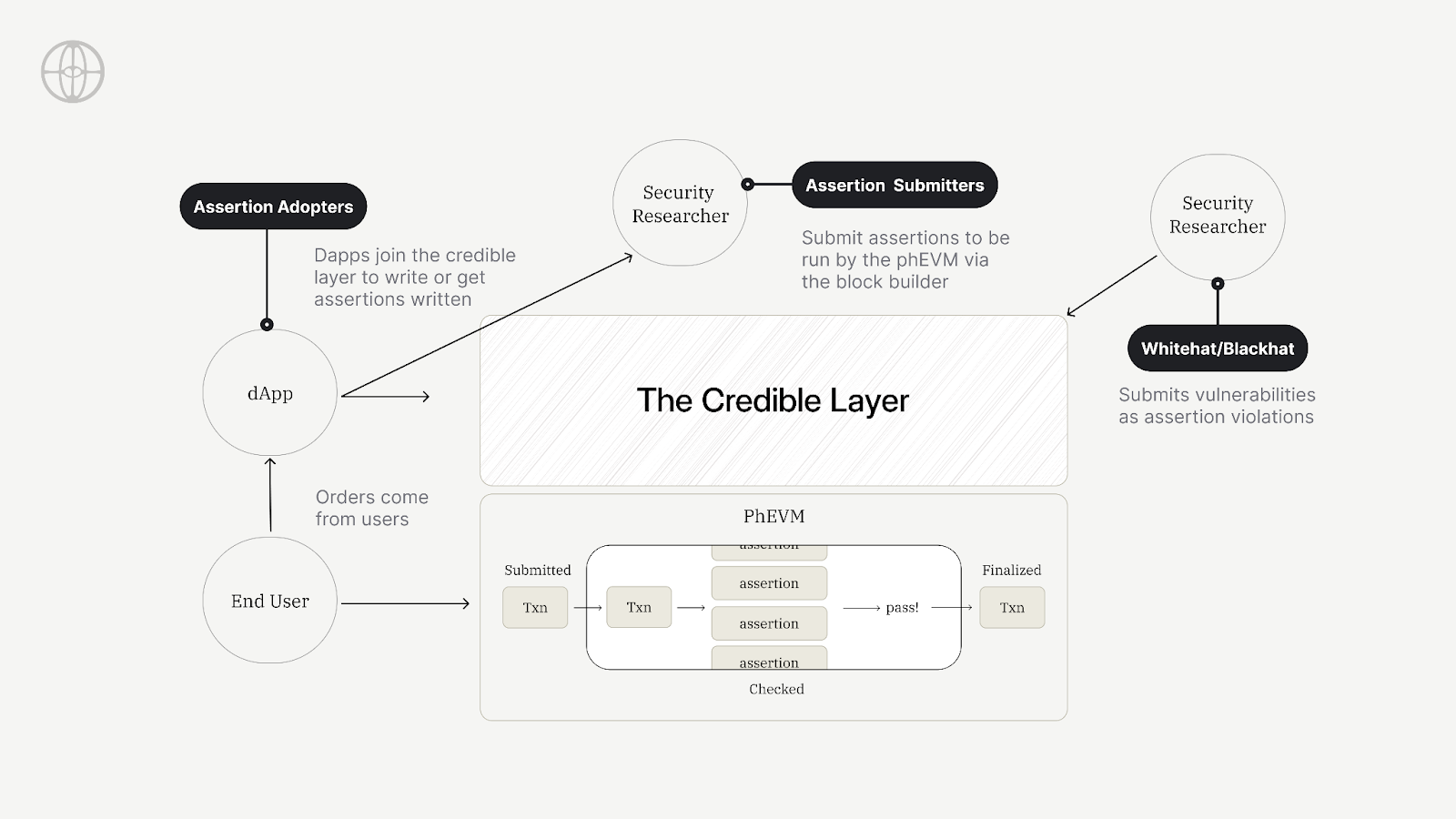

Our flagship product, the Credible Layer, integrates into a network’s base layer, allowing dApps to assert what a hacked state looks like to them. Network participants, such as block builders or sequencers, then verify these assertions against every transaction before it’s finalized, ensuring no transaction that would result in a hacked state ever occurs. Read on for the details.

The Nomad hack was just one in a long line of security breaches that have plagued our industry. Each one erodes trust, hinders adoption, and provides ammunition for skeptics and regulators alike. But what if we could prevent these hacks before they happen? What if we could build a security layer so fundamental, so embedded in the blockchain itself, that circumventing it would be nearly impossible?

In this blog post, I’ll explore the urgent need for enhanced security solutions, examine the limitations of existing options, and introduce what Phylax is bringing to market. I’ll also share who we are as a company and why we believe our approach, which we call Credible Security, is the key to unlocking crypto's true potential.

Before we dive in, I want to mention that we're growing our team to tackle this monumental challenge. If you're passionate about securing the future of crypto, we're hiring.

Problem

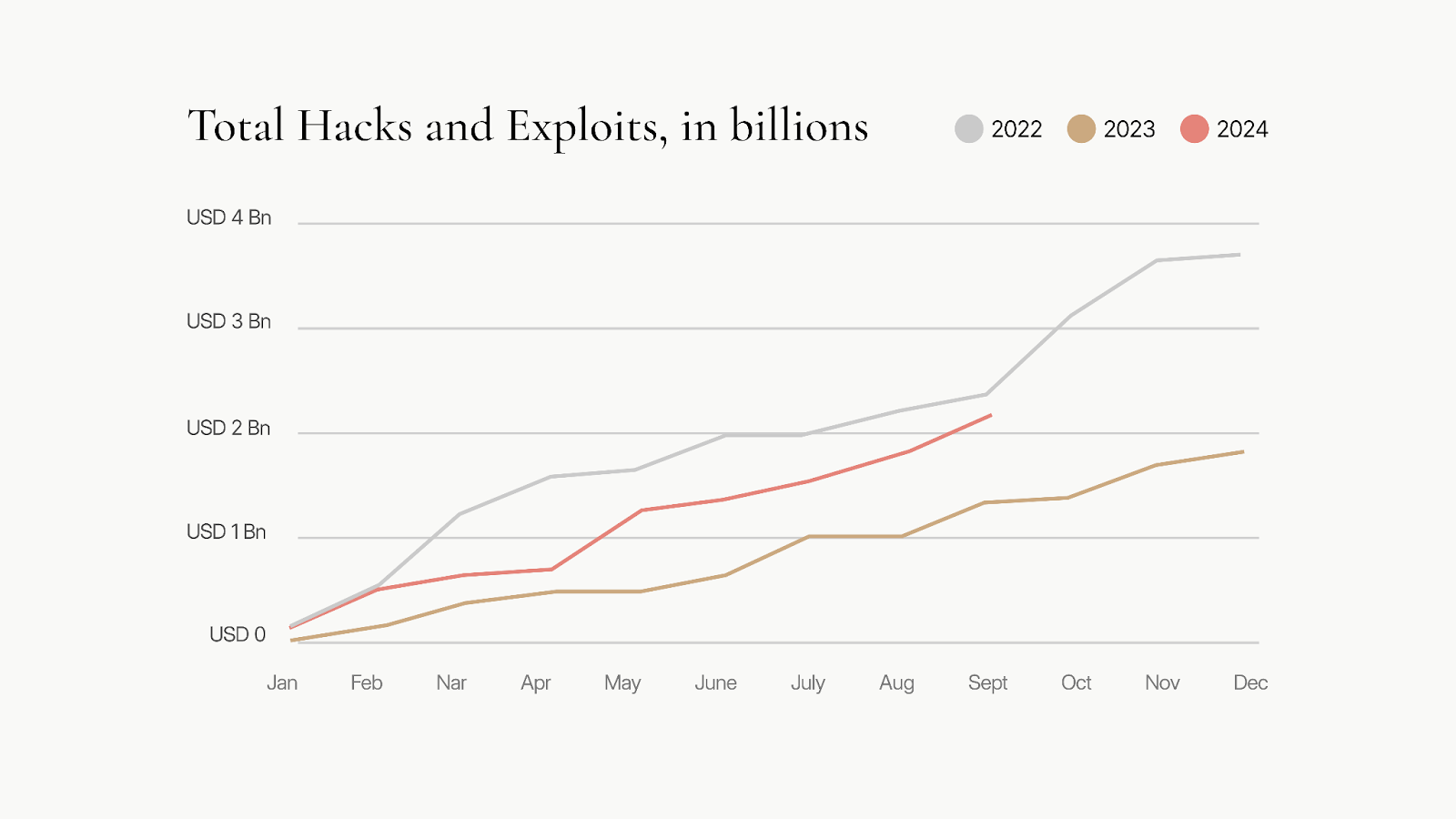

With more than $10B lost to preventable hacks, most of them targeting DeFi, security has become a barrier to mainstream adoption and trust.

One of the core complexities of hack prevention is the sheer number of vectors from which a protocol can be attacked, ranging from smart contract vulnerabilities to private key leakage, oracle price manipulation, and even exploitable compiler bugs.

Because of this complexity, it’s very hard to identify vulnerabilities before a hack occurs and to prevent their exploitation accurately and in time.

Ramifications

Here is why this all matters (even to those of you who haven’t experienced any sort of hack before).

- Hacks continue to dominate the narrative and most people’s perception of the industry.

- Regulators use the perceived lack of security as an excuse to pursue and intensify Operation Choke Point 2.0. See it in action in a recent Financial Services hearing.

- The industry hasn’t attracted enough capital from traditional sources to enable wider adoption and new use cases. In simple terms, we haven’t reached escape velocity, and I believe that is largely due to the security concerns that still plague our new sector.

Current Solutions

Market

The existing security solutions can be broadly categorized into two groups:

- Large-scale analysis of on-chain transactions in the wild in an attempt to identify tell-tale patterns that occur before a hack happens.

- Monitoring of the protocol’s contracts and triggering alerts or automatic mitigation actions once a transaction that looks like a hack is detected on the blockchain.

The first category is based on the observation that most hacks follow a specific preparation pattern (e.g. funding a contract with funds from Tornado Cash). The second category works only if the attack requires multiple transactions that are not included in the same block, in which case it serves as a damage-control mechanism. While it is useful to know that an attack has occurred, that knowledge is of limited value if nothing can be done to mitigate it.

Unless the vulnerability is reported before the exploit, it is usually too late.

How dApp Monitoring brought me to the concept for the Credible Layer:

Preventing hacks in a dApp starts with visibility—being able to monitor its behavior effectively. Monitoring can occur at two levels: client-side, where tools like wallet scanners check transactions before submission, and server-side, where network-level monitoring ensures ongoing security after the transaction is processed.

I focused on server-side monitoring, which provides deep visibility into the dApp’s real-time operations within the blockchain.

One thing I noticed early on, coming from dapptools and contributing to Foundry over two years ago, was the huge increase in efficiency I got from a tool that leverages the skills I already have instead of requiring me to learn new languages or concepts. Dapptools, and later Foundry, became staples of development because they encapsulated all the complexity of smart contract testing and used a language developers already knew: Solidity. Writing tests was less mentally taxing because users wrote them the same way they wrote their code.

Today, most dApp developers are familiar with fork testing, where the developer writes tests against the on-chain state of their dApp. If a developer writes fork tests in Foundry and then runs them in a loop against the latest block, they’ve essentially built an alerting system. It monitors the live state of the dApp and every test is analogous to an alerting rule.
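To make the analogy concrete, here is a minimal Python sketch under simplified, hypothetical assumptions rather than real Foundry tests: each alerting rule is a plain function (the illustrative `solvency_rule`), and a list of `Snapshot` objects stands in for the state you would fetch from a node at each new block. Running every rule against every new block is the "fork tests in a loop" idea in miniature.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class Snapshot:
    """Hypothetical view of a dApp's on-chain state at one block."""
    block: int
    total_assets: int
    total_liabilities: int


# An alerting rule plays the role of a single fork test: True means "passes".
Rule = Callable[[Snapshot], bool]


def solvency_rule(s: Snapshot) -> bool:
    # Passes while the protocol holds at least as much as it owes.
    return s.total_assets >= s.total_liabilities


def check_block(snapshot: Snapshot, rules: Dict[str, Rule]) -> List[str]:
    """Run every rule against one block's state; return the names that failed."""
    return [name for name, rule in rules.items() if not rule(snapshot)]


def monitor(snapshots: List[Snapshot], rules: Dict[str, Rule]) -> List[Tuple[int, str]]:
    """One pass per new block, like re-running the fork tests in a loop."""
    alerts = []
    for snap in snapshots:
        for failed in check_block(snap, rules):
            alerts.append((snap.block, failed))
    return alerts


# Usage: only the third block breaks solvency, so it is the only one that alerts.
history = [
    Snapshot(100, 1_000, 900),
    Snapshot(101, 1_000, 1_000),
    Snapshot(102, 500, 1_000),
]
alerts = monitor(history, {"solvency": solvency_rule})  # [(102, "solvency")]
```

In a real setup, the snapshots would come from polling the latest block and the rules would be the fork tests themselves; the sketch only shows that a test failing at block N becomes an alert for block N, after the fact.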

Then it struck me: with a few changes, this alerting system could also be used to prevent hacks. That’s when I started exploring how hacks could be avoided altogether.

Hack Prevention

As any DevOps professional will tell you, the goal of observability is not to watch your server blow up in real time but to predict how and why it will blow up in the future so that you have time to mitigate the issue without the users experiencing any degradation.

“Hack Prevention needs to be preventative. Methods that can be circumvented will be circumvented.”

With the proliferation of MEV, the industry started seeing what we call “generalized front-runners”: bots that simulate pending transactions against live on-chain state to determine whether they can profit by copying a transaction and getting it included before the original. Predictably, these bots began front-running hacks by chance, prompting teams to offer front-running as a hack prevention technique: define your rules, we evaluate transactions in the mempool, and if one violates those rules, we front-run it with a mitigating transaction. This effectively pauses a protocol before the hack happens.

Unfortunately, these days a hacker can simply use a private mempool, such as Flashbots or Blocknative. Even in the unlikely scenario that every private mempool starts collaborating with these hack prevention companies, we are bound to see what I call “Dark Mempools.” These mempools will be tied to validators in exotic jurisdictions and will offer a unique service: we will include your transaction, no questions asked.

It’s a race to the bottom. It’s a cat-and-mouse game where the cat is destined to lose in the end. It’s a losing strategy.
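The toy Python model below illustrates both the front-running approach and why private order flow defeats it. Everything in it is a hypothetical simplification: `Protocol`, `guard`, and `run` are illustrative names, the builder is assumed to order transactions purely by priority fee, and the "rule" is a simple cap on how much a single transaction may withdraw.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Tx:
    sender: str
    action: str        # hypothetical label: "withdraw" or "pause"
    amount: int
    priority_fee: int  # the toy builder orders by this, highest first


@dataclass
class Protocol:
    balance: int
    paused: bool = False

    def apply(self, tx: Tx) -> None:
        if tx.action == "pause":
            self.paused = True
        elif tx.action == "withdraw" and not self.paused:
            self.balance -= min(tx.amount, self.balance)


def violates_rules(protocol: Protocol, tx: Tx, max_withdraw: int) -> bool:
    """Simulate tx on a copy of the state and flag drains above the cap."""
    sim = Protocol(protocol.balance, protocol.paused)
    sim.apply(tx)
    return protocol.balance - sim.balance > max_withdraw


def guard(public_mempool: List[Tx], protocol: Protocol, max_withdraw: int) -> List[Tx]:
    """Watch the public mempool and outbid each violating tx with a pause."""
    pauses = [
        Tx("guardian", "pause", 0, tx.priority_fee + 1)
        for tx in public_mempool
        if violates_rules(protocol, tx, max_withdraw)
    ]
    return public_mempool + pauses


def run(protocol: Protocol, txs: List[Tx]) -> Protocol:
    """Toy block builder: apply transactions in descending priority-fee order."""
    for tx in sorted(txs, key=lambda t: t.priority_fee, reverse=True):
        protocol.apply(tx)
    return protocol


# Usage: the same attack, first sent publicly, then through a private channel.
attack = Tx("attacker", "withdraw", 900, priority_fee=10)

public = Protocol(1_000)
run(public, guard([attack], public, max_withdraw=100))
# public.balance == 1_000: the pause outbids the attack and lands first

private = Protocol(1_000)
run(private, guard([], private, max_withdraw=100) + [attack])
# private.balance == 100: the guard never saw the attack
```

The guard can only react to what it can see; the moment the attack bypasses the public mempool, the protection disappears.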

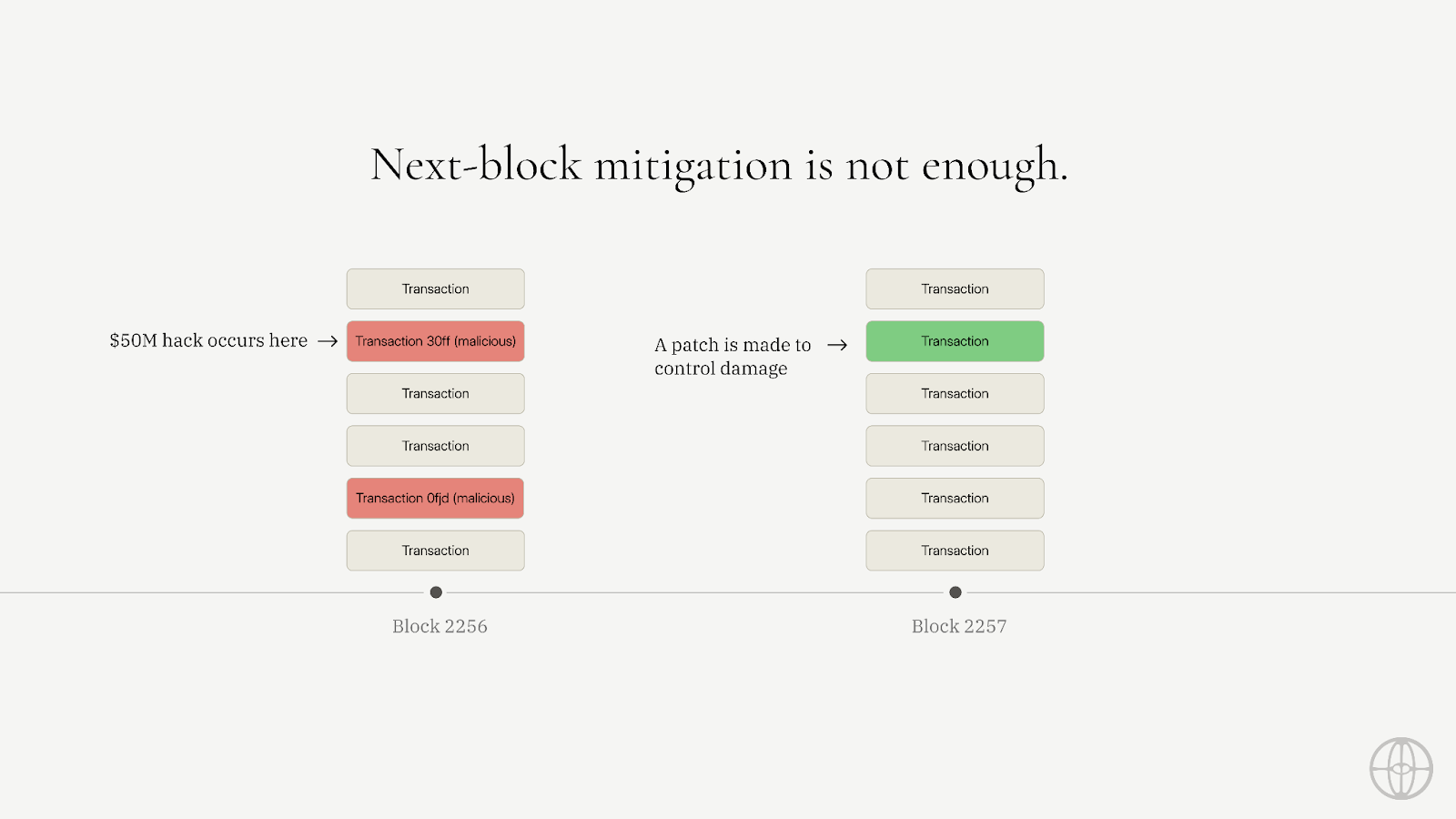

Moreover, we started seeing various services that offer “next-block mitigation,” a more relaxed version of the front-running I described above:

If X happens at block N, we will do Y at block N + 1.

These players usually reference the Euler or Nomad hacks as examples of what they could have prevented.

The issue here is that hackers have no reason to carry out the attack over an extended number of blocks. A reasonably sophisticated protocol engineer could deploy a malicious smart contract and perform all the required actions in a single block. As operational security evolves in the space, hackers will be pushed toward operational excellence, rendering these solutions obsolete.
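A back-of-the-envelope Python sketch shows why. It assumes a hypothetical monitor that reacts at block N + 1 whenever any single drain at block N exceeds a threshold, and that its pause lands as the first transaction of block N + 1. The same total drain is spread over five blocks in one case and packed into a single block in the other.

```python
from typing import List


def next_block_mitigation(blocks: List[List[int]], balance: int, threshold: int) -> int:
    """Return the balance left after an attack, given per-block lists of drains.

    Hypothetical monitor: if any drain in block N exceeds `threshold`, a pause
    is assumed to land first in block N + 1, so nothing from block N + 1
    onward gets through.
    """
    paused = False
    for drains in blocks:
        if paused:
            break
        for amount in drains:
            balance -= min(amount, balance)
        if any(amount > threshold for amount in drains):
            paused = True  # the reaction is scheduled for the next block
    return balance


# The same 1,000 drain, spread over five blocks versus packed into one.
multi_block = next_block_mitigation([[200], [200], [200], [200], [200]], 1_000, 100)
single_block = next_block_mitigation([[200] * 5], 1_000, 100)
# multi_block == 800: only the first block's drain gets through
# single_block == 0: the pause arrives after the funds are gone
```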

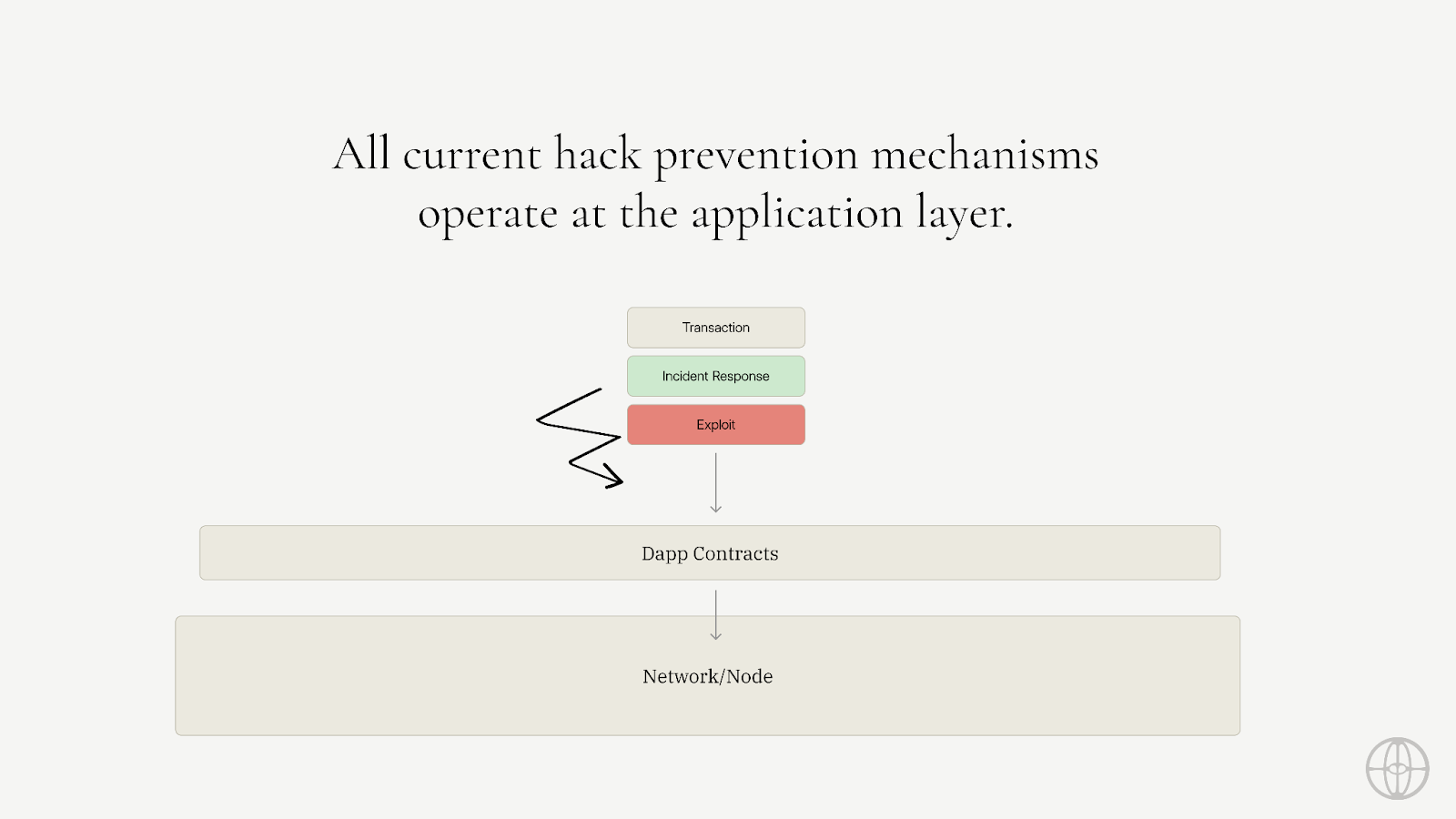

If you want to implement true hack prevention, you can’t operate at the application level. You have to go deeper, you have to operate at the base layer.

AI and Fuzzing are additive, not silver bullets.

Another very common design pattern is using AI, fuzzing, or symbolic and property-based testing to find vulnerabilities in deployed smart contracts.

Although there are well-understood vulnerability patterns (e.g. reentrancy), I concluded that developing and maintaining these solutions takes far more effort than building a platform that lets developers define what a hack is. Since the creators of a protocol should be its foremost experts, I found it much more impactful to build tools that make it very simple for them to define what a hack means for their protocol. There is certainly a place for fuzzing and similar techniques, but in my mind, they are additive.

Finally, AI and fuzzing are hard to scale. The more computation you put in, the more expensive and slower they become. So the more accurate they get, the more expensive they are to scale, and the harder (I think impossible) it becomes to do any real-time mitigation, like the MEV approach I mentioned above.

The techniques are too probabilistic while requiring huge engineering effort. All in all: a suboptimal ROI for the work needed.

Introducing Credible Security

That brings us to what I believe is a fresh approach to security, which I first introduced back in October 2023 (startups take some time to kick off).

We need a system that is:

- Embedded in the base layer, so that it’s impossible to circumvent.

- Provable, so that dApps and their users can prove that security exists, and so that the base layer has a social incentive, and corresponding “slashing,” to honor the security properties of the dApps.

Essentially, Credible Security: Provable + Embedded

Both components are critical to align incentives between the dApps and the underlying network, while also creating a system that is proactively secure rather than one that retroactively controls damage.

The paradigm that has prevailed up until now is what we call “Trusted Security.”

Trusted Security is a system that is:

- Application-level, constrained to what an actor at that level can do, which is to either react at some block state or at best attempt MEV-related strategies.

- Trusted, which means that there is no objective definition of a dApp’s security; instead, the social layer arrives at a consensus after a hack happens.

In short, Trusted Security: Observable (not Provable) + Application-level (not Embedded)

Why is Credible Security Important?

The industry is at a critical juncture in terms of maturity and adoption. Even with the recent hostile US regulatory stance, we are seeing clear signs of general adoption, be it in the form of ETFs or the widespread use of stablecoins. Billions of dollars lost to hacks will leave users confused and helpless. It is one of the last issues holding our industry back.

While it's often emphasized that our industry's infrastructure can support far more users than currently engage with crypto apps, the real question is: are we truly prepared for an influx of new consumer users?

In my view, security and UX are the two main reasons the answer is no. Not only is blockchain UX still more technically complex than it should be, but the risk of losing your funds is exorbitantly high for the average user. Even if you are an institution and follow all the best practices as a user (e.g. not opening scam links), there is still a considerable risk that your capital will be lost through some vulnerability in the dApp you deploy it to. Moreover, due to the decentralized nature of blockchains, there is no recourse. If something happens on Ethereum, it happens. End of story. There are no second chances.

The first network, whether a rollup on Ethereum or another L1 blockchain, that solves security in some fashion will attract considerable capital. That capital will be deployed in much larger quantities, simply because the hack risk its holders have to underwrite will be much lower. That capital, in turn, will fuel existing and new use cases, enabling much more organic adoption by users.

Simply put, we need to solve security for crypto to win.

The Phylax Systems Vision and Mission

Our Vision:

We solve security by making products that are simple to use, built on open standards, and guided by first-principles thinking.

Design Principles

1. Open Standards

Since the advent of the Internet, open-source methodologies have been a force multiplier in tech. They have enabled the frictionless sharing of ideas and code, allowing close collaboration between groups of people who may be geographically far apart but hold similar cultural values.

Building on open standards enables users to freely switch between applications and services, without the fear of lock-in. Products win because of the value they provide, not because of the activation energy required for a user to transfer and modify their workflows to a competing product. On top of that, the innovation and value that we produce stays in the industry. These patterns and paradigms are as valid as they were in 2015, and as they will be in 2035. Tools and products may change, but every generation builds on top of the last.

In security, there is an added benefit: by using open-source tools, the space as a whole continues to advance. A good set of tests written in Forge for Balancer can easily be repurposed for a newer dApp built by less experienced engineers.

Security systems built on open-source principles are good not only for the traditional reasons; they also inspire trust through transparency, an important north star of decentralized tech.

2. Intuitive to Use

The second design principle that drives how we build our products is an obsession with user experience. Great products are built by people and organizations that sweat every single detail of the user journey, from the first installation to that little feature only a small subset of users will leverage.

When building products, you have to manage complexity, and there is a tension between internal and external complexity. Internal complexity is the engineering complexity that is encapsulated into the product, while external complexity is the complexity that is exposed to the user and usually translates into a worse UX.

Great products, in my opinion, are built by decreasing external complexity as much as possible, even if that means increasing the internal complexity and the amount of great engineering that is needed.

You must wrap the product around the user, rather than the user around the product. It’s important to correctly identify the affordances and context that the expected user already has and leverage them, instead of requiring the user to gather new context to use the product. As my ex-boss at Balena used to say: “Documentation is a failure of the product.” While I disagree with the dogmatism of that view, I think its spirit strikes at the heart of what makes great products great.

Another way to build intuitive developer-oriented products is by “productionising” the collective experience of your organization into the product itself. Users shouldn't need to read the raw data points of your application to understand whether it’s healthy. You should be able to encode that knowledge into the product itself, tell them when the application is unhealthy, and suggest ways to fix it. As the expert on your product, you encode this information once (internal complexity) so that your users don’t have to become experts in your product (external complexity).

This is usually table stakes in consumer products, due to the consumer segment's lack of technical knowledge, and it is the reason Apple was so successful; in developer tooling, however, it is usually an afterthought. I think this is a fundamentally different way of building developer products, and it is why we think users will find our security products to be a “blissful experience,” even though their subject is fundamentally complex.

3. Question Dogma

Finally, a pattern I observed early in my career around product building is that great products are built by people who enjoy questioning the status quo when it comes to how things are done. This can come in many forms, from the principles behind a product to the protocol design itself.

The industry is maturing out of the “Decentralization Theatre” era, when people advocated for maximum decentralization and minimum trust assumptions without considering the true value of those tradeoffs. A clear example is the proliferation of smart contracts that follow the “Upgradeability Pattern,” which allows logic to be upgraded, contrary to the “immutability” ethos. The rationale is very simple: immutability is not conducive to modern product building, where you want to ship updates and improvements without requiring thousands of users to migrate. And even if they do end up migrating, what was the point of immutability in the first place? The tradeoff is simply not worth it, at least not for all use cases.

Another example is the proliferation of centralized sequencer designs, such as Base. People are realizing that trust is a balancing act. It can be given or taken away and is not always black and white. The more you trust something, the more you get in return in terms of performance. A simplified stack means a better UX and greater security, as the system is easier to inspect and reason about. The first users of crypto were decentralization maxis. To bring crypto to the masses, a balance may need to be struck between decentralization and simplicity.

Of course, this is nothing new; the first step in that direction came early. The DAO hack showed us that Ethereum may be quite decentralized but is not truly sovereign, as the community and node operators were able to coordinate a change to the protocol. The hack was an adversarial action, a declaration of war. In ancient Rome, democracy could be suspended in times of emergency, with a single person placed in charge of coordinating the government’s response. Similarly, democracy was suspended on Ethereum to respond to the hack. In the end, social consensus holds all the power, whether we want to admit it or not. Ethereum is valuable and Ethereum Classic is not because social consensus settled on Ethereum as the canonical source of truth. Given the power of social consensus, adopting excess complexity to maximize decentralization and minimize trust may not always be the optimal approach.

With that in mind, I started thinking: “What if censorship can be used for good?” What if censorship is another tool that can be used in a variety of ways? What if censoring a transaction designed to break the intended behavior of a protocol is not bad censorship, but rather honors the intent of the smart contract's creator? If the base layer is the public square where everyone can walk freely and interact in any way they want, a smart contract is my house, and if people want to enter my house, they have to respect my rules.

These rules are currently encoded as smart contract code, but sometimes that is not enough. We may need an extra set of rules that work in tandem with the code and ensure that a smart contract's behavior aligns with its intended behavior. A hack is exactly the opposite: the moment business logic and protocol logic no longer align. A hack is simply behavior that is valid according to the protocol logic but contradicts the intended business logic or expected functionality.

Products

So, here is what we are building at Phylax and why I am excited about it (though, of course, I am biased).

Phylax Credible Layer

The Credible Layer is our groundbreaking on-chain protocol designed to prevent hacks before they occur. It enables block builders, such as L2 sequencers, to guarantee that transactions resulting in smart contract hacks won't be processed. Unlike traditional security measures that operate at the application layer or rely on post-hack detection, the Credible Layer embeds security directly into the block building process. This means that exploits are prevented before they can even be executed on-chain.

Here is how it works:

The Phylax Credible Layer offers a remarkably simple integration path for blockchain security: no new contracts needed, just validity rules defined once. At its core, smart contracts define potential "hacks" through EVM bytecode called Assertions, which leverage off-chain computation to enable functionality beyond typical on-chain limitations, including access to multiple states.

Block builders act as security enforcers, validating each transaction against these assertions through simulation before confirmation. If a transaction would result in a "hack state," it's automatically removed from the block. This creates a straightforward economic model where dApps pay security fees, and block builders ensure network security. Each assertion is completely transparent, making all security measures publicly verifiable.
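In the Credible Layer, assertions are EVM bytecode, but the enforcement loop described above can be modeled in a few lines of Python. This is a sketch under simplifying assumptions, not Phylax's implementation or API: `State`, `Tx`, `execute`, `fully_collateralized`, and `build_block` are hypothetical names, the protocol logic in `execute` accepts any transaction, and each assertion receives the state both before and after a transaction. A transaction whose simulated post-state fails any assertion is left out of the block.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class State:
    """Hypothetical, heavily simplified lending-pool state."""
    collateral: int
    debt: int


@dataclass(frozen=True)
class Tx:
    name: str
    collateral_delta: int
    debt_delta: int


def execute(state: State, tx: Tx) -> State:
    """Protocol logic: whatever the contract code allows (here, anything)."""
    return State(state.collateral + tx.collateral_delta, state.debt + tx.debt_delta)


# An assertion sees the pre- and post-transaction state and returns True when
# the post-state is acceptable, i.e. not a "hack state".
Assertion = Callable[[State, State], bool]


def fully_collateralized(pre: State, post: State) -> bool:
    # This example only needs the post-state; `pre` shows that an assertion
    # may compare multiple states.
    return post.collateral >= post.debt


def build_block(state: State, pending: List[Tx],
                assertions: List[Assertion]) -> Tuple[List[Tx], State]:
    """Simulate each tx in order; drop any tx whose post-state fails an assertion."""
    included = []
    for tx in pending:
        post = execute(state, tx)
        if all(check(state, post) for check in assertions):
            included.append(tx)
            state = post  # only accepted transactions advance the state
    return included, state


# Usage: the "exploit" is valid protocol logic but leaves the pool undercollateralized.
pending = [Tx("borrow", 0, 200), Tx("exploit", 0, 1_000), Tx("repay", 0, -100)]
included, final = build_block(State(1_000, 500), pending, [fully_collateralized])
# [tx.name for tx in included] == ["borrow", "repay"]; final == State(1_000, 600)
```

The "exploit" transaction is perfectly valid under `execute`; it is excluded only because it contradicts the intended behavior encoded in the assertion, which mirrors the distinction between protocol logic and business logic drawn earlier.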

The system leverages both economic and social incentives to create a robust security framework. Assertion Enforcers stake funds that can be slashed if they fail to prevent hacks, while also facing potential legal and reputational consequences due to the provable nature of their actions. Credible Security does not increase the need for trust; it relies on the same block builders that users already trust to confirm transactions. The Credible Layer also introduces a novel market for security, where Assertion Submitters (security researchers and other security talent) can provide assertions and earn continuous rewards for their contributions to protocol security.

The system also introduces an innovative bounty mechanism where white-hat hackers can submit either a Proof of Possibility (PoP) to claim bounties for potential vulnerabilities, or a Proof of Realization (PoR) to demonstrate when an Assertion Enforcer has failed to prevent an exploit. By encoding security parameters as assertions, protocols can define precisely what constitutes a hack for their specific use case. This programmable approach to security allows for unprecedented customization while maintaining the simplicity of integration.
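To illustrate how a Proof of Realization could connect to slashing, here is a hypothetical Python model. The proof format, the full-stake slash, and the 50% reporter share are all assumptions made for the example, not the Credible Layer's actual parameters. A real system would also have to verify that the submitted states are authentic and that the enforcer actually included the offending transaction; this sketch simply trusts them.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# Hypothetical assertion signature: (pre_state, post_state) -> True if safe.
Assertion = Callable[[Dict[str, int], Dict[str, int]], bool]


@dataclass
class Enforcer:
    stake: int


@dataclass
class ProofOfRealization:
    # Hypothetical evidence: who built the block, which assertion was broken,
    # and the states before and after the included transaction.
    enforcer: str
    assertion_id: str
    pre_state: Dict[str, int]
    post_state: Dict[str, int]


class SlashingRegistry:
    def __init__(self, assertions: Dict[str, Assertion], reporter_share: float = 0.5):
        self.assertions = assertions
        self.enforcers: Dict[str, Enforcer] = {}
        self.reporter_share = reporter_share  # assumed split, for illustration

    def register(self, name: str, stake: int) -> None:
        self.enforcers[name] = Enforcer(stake)

    def submit_por(self, proof: ProofOfRealization) -> int:
        """Re-check the assertion; if it really fails, slash and return the reward."""
        check = self.assertions[proof.assertion_id]
        if check(proof.pre_state, proof.post_state):
            return 0  # the assertion held, so the claim is rejected
        enforcer = self.enforcers[proof.enforcer]
        slashed, enforcer.stake = enforcer.stake, 0
        return int(slashed * self.reporter_share)


# Usage: one rejected claim, then one valid claim against the same enforcer.
registry = SlashingRegistry({"solvent": lambda pre, post: post["assets"] >= post["debt"]})
registry.register("builder-a", stake=1_000)
rejected = registry.submit_por(ProofOfRealization(
    "builder-a", "solvent", {"assets": 10, "debt": 5}, {"assets": 10, "debt": 8}))
reward = registry.submit_por(ProofOfRealization(
    "builder-a", "solvent", {"assets": 10, "debt": 5}, {"assets": 10, "debt": 20}))
# rejected == 0, reward == 500, and builder-a's stake is now 0
```

Because the assertion itself is the arbiter, anyone can re-run it to check a claim, which is what makes the enforcer's failure provable rather than a matter of social consensus.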

The system's modular design allows for various extensions, including integration with restaking protocols for improved capital efficiency, the potential for automated on-chain insurance mechanisms, and much else. This flexibility ensures the Credible Layer can evolve alongside the growing needs of the wider ecosystem.

The Credible Layer shifts the paradigm from "Trusted Security" to "Credible Security," addressing the issue of hacks at the base level rather than the application level. The value proposition extends across the blockchain ecosystem. Simply put, we believe the Credible Layer, when fully implemented, will bring about safer blockspace which will be a key differentiator across networks for users and dApps.

We're developing a proof-of-concept testnet, with a demo deployment planned for Q4 and an L2-operated testnet. This represents a new standard for blockchain integrity, embedding security into the network's core.

We will share more on the Credible Layer closer to its launch. If you are interested in getting on the waitlist as a network, dApp, security researcher, or in any other capacity, please reach out here. You can also learn more about the Credible Layer on our website.

Community

While the Credible Layer is still in development, I invite you to join our community, where we will share all the latest updates.

We look forward to seeing you there and discussing Credible Security. Join us on Telegram.

Plans - Roadmap

We will be announcing some pretty big news in Q4. If you want to stay up to date, follow Phylax Systems or me on X.

Thanks for reading this.

Onward Φ
